The Real Cost of Bad Data: What Every Wholesaler Overlooks

If you’re wholesaling at any real scale, you’ve felt this pain:

You stack a list, skip trace it, upload it… and half the numbers don’t connect

Your team argues about whether a lead is “hot” because the notes are thin or inconsistent

Follow-up gets missed because ownership isn’t clear

Your pipeline looks “full”… but closings feel random

Most wholesalers shrug and call it “normal.”

But bad data isn’t a nuisance. It’s a margin killer.

And the worst part? It doesn’t fail once. It fails everywhere—quietly—until your whole operation feels unstable.

This post breaks down:

Where dirty data actually costs you money,

The five pitfalls most teams keep repeating, and

A simple 3-layer system to fix it (without rebuilding your tech stack).

Bad data doesn’t just waste time — it breaks your deal flow

Most operators only count surface costs: “We wasted a few hours calling bad numbers.”

The real costs hit the three levers that determine whether you scale responsibly or spin your wheels:

1) Speed-to-lead slows down (and speed wins deals)

Dirty records create hesitation:

“Is this the right owner?”

“Did we already contact them?”

“Is this number verified?”

That hesitation reduces speed, and speed is where your edge lives, especially when competitors respond faster and follow up harder.

2) Conversion drops (because your pipeline becomes a lie)

Dirty data inflates your “opportunity count” with:

duplicates

wrong owners

outdated contact info

incomplete records with zero context

You think you have 300 workable leads. In reality, you may have 120.

3) Trust erodes (inside your team and with sellers)

Internally, bad data causes:

broken handoffs

missed follow-ups

“I thought you handled it” failures

Externally, it’s worse:

wrong names

wrong properties

repeated outreach

conversations that feel careless

Trust is fragile. Dirty data chips away at it call by call.

Bad data creates a compounding tax across your operation:

Duplicate work: two people research the same deal, two people text the same seller

Broken follow-up: a lead is marked “nurture” but has no next action, no date

Wasted marketing spend: mail, SMS, and dials burn budget reaching the wrong contacts

Bad decisions: you reinvest in the wrong lists/channels because KPIs are polluted

Salesforce research points to a broader business version of this problem: leaders estimate that a meaningful portion of company data is siloed or unusable, and that the most valuable insights often sit inside that inaccessible chunk. (Salesforce)

In wholesaling, that translates to a brutal reality:

You don’t just lose time. You lose momentum.

And momentum is what turns outreach into contracts.

The 5 bad-data pitfalls most wholesaling teams repeat

1) Outdated contact info (data decay)

Contact data degrades constantly. HubSpot cites ~22.5% annual database decay in marketing lists. (HubSpot)

Even if your list was perfect on day one, it won’t stay perfect.

Impact: more dials, more texts, more cost… same results.
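To put the decay figure in concrete terms, the sketch below projects how much of a list stays accurate over time. It assumes the ~22.5% rate applies uniformly to every record and compounds year over year, which is a simplification: real decay varies by list source, list age, and field. The function names are illustrative.

```python
def surviving_records(start_count: int, annual_decay: float, years: float) -> float:
    """Estimate how many records are still accurate after `years`,
    assuming a constant annual decay rate that compounds (a simplification)."""
    if not 0.0 <= annual_decay < 1.0:
        raise ValueError("annual_decay must be in [0, 1)")
    return start_count * (1.0 - annual_decay) ** years


def monthly_rate(annual_decay: float) -> float:
    """Convert an annual decay rate into the equivalent compounding monthly rate."""
    return 1.0 - (1.0 - annual_decay) ** (1.0 / 12.0)


if __name__ == "__main__":
    list_size = 10_000  # hypothetical list size
    for years in (0.5, 1, 2):
        remaining = surviving_records(list_size, 0.225, years)
        print(f"after {years} yr: ~{remaining:,.0f} of {list_size:,} records still accurate")
    print(f"equivalent monthly decay: ~{monthly_rate(0.225):.1%}")
```

Under those assumptions, a 10,000-record list keeps about 7,750 accurate records after one year and about 6,000 after two, which works out to roughly 2.1% of the list going stale every month.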

2) Duplicates (stacking without dedupe discipline)

Stacking is smart. But stacking without merging rules creates:

double touches (seller annoyance)

inflated KPIs

wasted labor

messy handoffs

Impact: you pay twice to learn the same thing.
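One way to add dedupe discipline is to build a normalized match key from address plus owner name and merge records that share it. The sketch below assumes each lead is a plain dict with `address`, `owner`, `phones`, and `source` keys (hypothetical field names) and uses deliberately crude normalization (lowercase, strip punctuation, collapse whitespace). Real address matching usually also needs standardization, such as treating "St" and "Street" as the same.

```python
import re
from typing import Dict, List


def match_key(address: str, owner: str) -> str:
    """Build a crude normalized key: lowercase, drop punctuation, collapse spaces."""
    def norm(text: str) -> str:
        text = re.sub(r"[^a-z0-9 ]", " ", text.lower())
        return " ".join(text.split())
    return f"{norm(address)}|{norm(owner)}"


def dedupe(leads: List[Dict]) -> List[Dict]:
    """Merge leads that share a match key. Phones and sources are unioned;
    the first record seen supplies the displayed address and owner."""
    merged: Dict[str, Dict] = {}
    for lead in leads:
        key = match_key(lead["address"], lead["owner"])
        if key not in merged:
            merged[key] = {
                "address": lead["address"],
                "owner": lead["owner"],
                "phones": list(lead.get("phones", [])),
                "sources": [lead["source"]] if lead.get("source") else [],
            }
            continue
        target = merged[key]
        for phone in lead.get("phones", []):
            if phone not in target["phones"]:
                target["phones"].append(phone)
        source = lead.get("source")
        if source and source not in target["sources"]:
            target["sources"].append(source)
    return list(merged.values())


if __name__ == "__main__":
    stacked = [
        {"address": "123 Main St.", "owner": "Jane Doe", "phones": ["555-0100"], "source": "tax_delinquent"},
        {"address": "123 main st", "owner": "JANE DOE", "phones": ["555-0100", "555-0199"], "source": "vacant"},
    ]
    print(dedupe(stacked))  # one record, both phones, both list sources
```

Unioning phones and sources keeps the stacking signal (the same property showing up on several lists) while collapsing the duplicate touches and the inflated lead count.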

3) “Thin” records (no context)

An address + phone number is not a lead. It’s a guess.

If you’re missing:

owner confirmation

occupancy

condition notes

motivation signals

timeline

…your reps are forced to improvise and your follow-up becomes generic.

4) Bad tagging (everything becomes “hot” or “dead”)

Most CRMs become tag graveyards:

40 versions of “follow up”

“hot” with no reason

“dead” with no history

Impact: your best opportunities get buried.

5) No verification loop (errors flow downstream forever)

This is the big one.

If you don’t stop bad data at intake, every downstream activity gets more expensive:

comping

outreach

follow-up

underwriting

reporting

Gartner frames data quality as “fit for priority use cases” (including AI and ML). In wholesaling terms: if your pipeline isn’t usable for execution, it’s not “good data.” (Gartner)

The Dirty Data Domino Effect

Here’s how one bad record turns into lost deals:

Dirty data enters (wrong number, wrong owner, duplicate)

Outreach quality drops (low connect rate, generic messaging)

Follow-up breaks (no next action, unclear ownership)

Pipeline truth collapses (stages don’t reflect reality)

Decisions get worse (you buy more lists instead of fixing the system)


The fix: a 3-layer system (verification loops + enrichment + smarter tagging)

You don’t need more tools. You need control points.

Layer 1: Verification loops (stop bad data at the door)

Goal: catch errors before they hit outreach.

Practical rules that work:

Auto-flag missing critical fields (owner name, phone, address)

Auto-detect duplicates on import (address + owner match)

Require a “reason” when marking a lead hot/cold (dropdowns beat free-text chaos)

Add a “verified contact” field (yes/no/unknown)

This is QC for your pipeline. Without it, defects ship.
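Here is a minimal sketch of the missing-field, required-reason, and verified-contact rules as an import-time check. The field names, the `VerifiedStatus` values, and the reason dropdown options are hypothetical placeholders; map them to whatever your CRM actually stores.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class VerifiedStatus(Enum):
    YES = "yes"
    NO = "no"
    UNKNOWN = "unknown"


# Hypothetical dropdown options: a fixed set prevents free-text drift.
TEMPERATURE_REASONS = {
    "hot": {"stated_timeline", "asked_for_offer", "distress_confirmed"},
    "cold": {"not_selling", "listed_with_agent", "wrong_owner"},
}

CRITICAL_FIELDS = ("owner_name", "phone", "address")


@dataclass
class Lead:
    owner_name: str = ""
    phone: str = ""
    address: str = ""
    temperature: Optional[str] = None  # "hot", "cold", or None
    reason: Optional[str] = None
    verified_contact: VerifiedStatus = VerifiedStatus.UNKNOWN
    flags: List[str] = field(default_factory=list)


def check_intake(lead: Lead) -> Lead:
    """Attach flags to a lead instead of silently accepting bad data."""
    for name in CRITICAL_FIELDS:
        if not getattr(lead, name).strip():
            lead.flags.append(f"missing:{name}")
    if lead.temperature is not None:
        allowed = TEMPERATURE_REASONS.get(lead.temperature)
        if allowed is None:
            lead.flags.append(f"unknown_temperature:{lead.temperature}")
        elif lead.reason not in allowed:
            lead.flags.append("temperature_without_valid_reason")
    if lead.verified_contact is VerifiedStatus.UNKNOWN:
        lead.flags.append("contact_unverified")
    return lead


if __name__ == "__main__":
    incoming = Lead(owner_name="Jane Doe", address="123 Main St", temperature="hot")
    print(check_intake(incoming).flags)
```

Flagging rather than rejecting keeps imports moving while still making every defect visible before outreach starts; a stricter setup could block records with `missing:` flags entirely.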

Layer 2: Enrichment (turn thin records into usable intelligence)

Goal: reduce manual research and increase personalization + connect rate.

Enrichment can add:

better contact coverage

property context

owner context

confidence signals (so reps prioritize smarter)

Layer 3: Smarter tagging (create speed and consistency)

Goal: build a shared language so the pipeline becomes trustworthy.

Keep it tight:

6–8 pipeline stages with definitions

8–12 motivation tags total

required “next action” + “next follow-up date”

required “owner” field (one throat to choke)
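One way to encode these tagging rules is a fixed schema plus a validation check. The eight stage names and ten motivation tags below are hypothetical examples, not a recommended taxonomy, and exempting closed or dead records from the next-action requirement is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import List, Optional, Set


class Stage(Enum):
    # Hypothetical 8-stage pipeline; each stage should also have a written definition.
    NEW = "new"                   # imported, not yet contacted
    CONTACTED = "contacted"       # reached a person at least once
    QUALIFIED = "qualified"       # owner confirmed, motivation identified
    APPOINTMENT = "appointment"   # walkthrough or call scheduled
    OFFER_MADE = "offer_made"
    UNDER_CONTRACT = "under_contract"
    CLOSED = "closed"
    DEAD = "dead"


# Hypothetical closed vocabulary of motivation tags (kept within 8-12).
MOTIVATION_TAGS: Set[str] = {
    "vacant", "probate", "inherited", "tax_delinquent", "pre_foreclosure",
    "tired_landlord", "divorce", "relocation", "major_repairs", "code_violation",
}

TERMINAL_STAGES = {Stage.CLOSED, Stage.DEAD}


@dataclass
class PipelineRecord:
    stage: Stage
    owner: str  # the single accountable team member, not the property owner
    next_action: str = ""
    next_follow_up: Optional[date] = None
    tags: Set[str] = field(default_factory=set)


def validate(record: PipelineRecord) -> List[str]:
    """Return a list of rule violations; an empty list means the record is clean."""
    problems = []
    if not record.owner.strip():
        problems.append("no owner assigned")
    unknown = record.tags - MOTIVATION_TAGS
    if unknown:
        problems.append(f"unknown tags: {sorted(unknown)}")
    if record.stage not in TERMINAL_STAGES:
        if not record.next_action.strip():
            problems.append("missing next action")
        if record.next_follow_up is None:
            problems.append("missing next follow-up date")
    return problems
```

Keeping tags in a closed set means a rep cannot create the 41st version of "follow up"; anything outside the list shows up as a violation instead of quietly joining the graveyard.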

IBM’s common dimensions of data quality include accuracy, completeness, consistency, uniqueness, and timeliness—those map cleanly to how a wholesaling CRM should behave day-to-day. (IBM)

A 30-day plan built for scaling wholesalers (14-day cleanup, then rollout)

Days 1–3: Define the “minimum viable truth”

Pick your non-negotiables:

required fields

stage definitions

tag list

verification flags

Days 4–7: Clean + dedupe your current pipeline

merge duplicates

fix missing required fields

archive junk leads (don’t keep poison in the system)

Days 8–14: Implement your intake verification loop

import rules

duplicate detection rules

mandatory “reason” fields

verified contact workflow

Weeks 3–4: Add automation so follow-up stops depending on willpower

enrich on intake

auto-assign by territory/source

auto-trigger follow-up by stage
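Here is a minimal sketch of the assignment and stage-triggered follow-up rules, expressed as plain lookup tables. Rep names, territories, stage keys, and day counts are all hypothetical, and checking territory before source is one possible priority order, not the only sensible one.

```python
from datetime import date, timedelta
from typing import Dict, Optional

# Hypothetical routing rules: territory first, then lead source, then a default queue.
TERRITORY_OWNERS: Dict[str, str] = {"north": "alex", "south": "jordan"}
SOURCE_OWNERS: Dict[str, str] = {"probate": "sam"}
DEFAULT_OWNER = "acquisitions_queue"

# Hypothetical cadence: days until the next touch for each open stage.
FOLLOW_UP_DAYS: Dict[str, int] = {
    "new": 0,          # same day, to protect speed-to-lead
    "contacted": 2,
    "qualified": 3,
    "appointment": 1,
    "offer_made": 2,
}


def assign_owner(territory: Optional[str], source: Optional[str]) -> str:
    """Pick the accountable rep: territory rule wins, then source, then default."""
    if territory in TERRITORY_OWNERS:
        return TERRITORY_OWNERS[territory]
    if source in SOURCE_OWNERS:
        return SOURCE_OWNERS[source]
    return DEFAULT_OWNER


def next_follow_up(stage: str, today: date) -> Optional[date]:
    """Return the next follow-up date for a stage, or None if the stage has no cadence."""
    days = FOLLOW_UP_DAYS.get(stage)
    return None if days is None else today + timedelta(days=days)


if __name__ == "__main__":
    print(assign_owner("east", "probate"), next_follow_up("contacted", date.today()))
```

Because every new lead gets an owner and every open stage produces a date, follow-up no longer depends on someone remembering to set one.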

Where DealScale fits (without adding chaos)

Most wholesalers don’t need “more software.”

They need clean data that turns into action automatically.

DealScale is built to:

import leads from spreadsheets/exports

normalize + enrich records so they’re actually usable

trigger action so follow-up is consistent (and measurable)


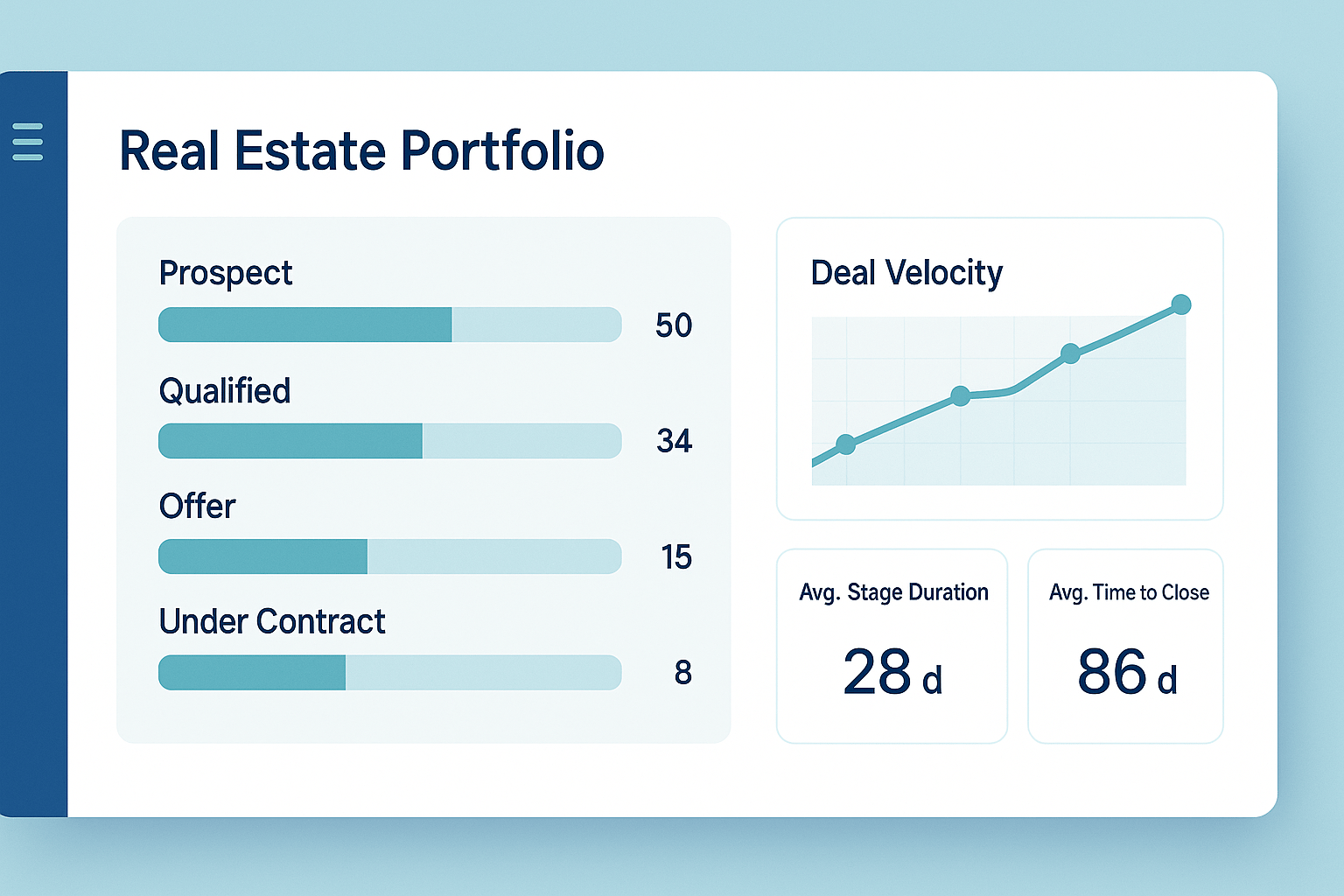

A clean real estate portfolio dashboard showing pipeline stages and deal velocity metrics.

Bottom line: clean data is a profit center

Bad data steals the only resources you can’t replace:

time

attention

seller trust

team momentum

Clean data does the opposite:

raises connect rates

improves conversion

stabilizes forecasting

makes follow-up feel inevitable

If you’re scaling, don’t treat data quality like admin work.

Treat it like what it is:

a compounding advantage.

Sources

Salesforce, “Data and Analytics Trends for 2026”

HubSpot, “Database Decay Simulation”

Gartner, “Data Quality: Best Practices…”

IBM, “What is data quality?”